I’ll get the punchline in first. My thesis is that if you measure your conversion-rate as:

Checkouts per visitor-minute spent on your site

then most of the differences in conversion rate between device-types will evaporate, and you’ll discover that your mobile visitors convert just as well as your PC and tablet visitors.

To be more precise, my proposal is that checkouts per quantum of useful information/content consumed is the best measure of conversion; unfortunately this is very difficult to measure directly, and visitor-minutes is a reasonable proxy.
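As a minimal sketch of the proposed metric, assume you can export per-session records carrying a device label, a session duration and a checkout count. The `Session` type and its field names are hypothetical, not taken from any particular analytics product. Checkouts per visitor-minute by device is then total checkouts divided by total minutes:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Session:
    """Hypothetical per-session record; the field names are illustrative."""
    device: str           # e.g. "smartphone", "tablet", "desktop"
    duration_secs: float  # time the visitor spent on the site in this session
    checkouts: int        # completed checkouts attributed to this session


def checkouts_per_visitor_minute(sessions):
    """Sum checkouts and visitor-minutes per device, then divide."""
    minutes = defaultdict(float)
    checkouts = defaultdict(int)
    for s in sessions:
        minutes[s.device] += s.duration_secs / 60.0
        checkouts[s.device] += s.checkouts
    # Skip devices with no measured time to avoid dividing by zero.
    return {d: checkouts[d] / m for d, m in minutes.items() if m > 0}


# Made-up sample data, purely to show the calculation.
sample = [
    Session("smartphone", 120, 0),
    Session("smartphone", 60, 1),
    Session("desktop", 600, 1),
    Session("desktop", 300, 2),
]
print(checkouts_per_visitor_minute(sample))
```

With this invented sample, smart-phones show 1 checkout over 3 visitor-minutes (about 0.33) and desktop 3 checkouts over 15 visitor-minutes (0.2). Summing time and checkouts before dividing, rather than averaging per-session ratios, stops short sessions from being over-weighted.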

OK, so now for the back-story, analysis, and a request for some data-sharing.

I’m currently doing the research needed to bring my book ‘The Multichannel Retail Handbook’ back up to date. Obviously one of the chapters that most needs revising is the one on Mobile, because it’s an area that’s developed extraordinarily quickly, even since I wrote the previous edition in 2012.

I was peacefully trotting out a draft including the received wisdom that mobile visitors don’t convert as well as desktop or tablet visitors. There are plenty of published statistics demonstrating this, and typically they quote something like a 40:90:100 index for smart-phone:tablet:desktop conversion rates. On a typical retailer’s website, this might translate as conversion rates of 1.2%:2.7%:3.0% or thereabouts. Obviously it depends on what you sell and so forth. The blended use of the term “mobile” to mean smart-phone and tablet together makes me wince – the channels are totally different in usage and behaviours – but a generic mobile conversion rate is about 50 on the same index, or 1.5% in absolute terms.
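The index arithmetic is simply the desktop rate scaled by index/100. A short sketch using the illustrative 3.0% desktop figure from the paragraph above (the constant and function names are my own):

```python
# Conversion-rate index from the text, with desktop = 100.
INDEX = {"smartphone": 40, "tablet": 90, "desktop": 100, "blended mobile": 50}

# Illustrative desktop conversion rate (%) for a "typical" retailer, as in the text.
DESKTOP_RATE_PCT = 3.0


def rate_from_index(index, desktop_rate_pct=DESKTOP_RATE_PCT):
    """Scale the desktop conversion rate by an index where desktop = 100."""
    return desktop_rate_pct * index / 100


for device, idx in INDEX.items():
    print(f"{device}: {rate_from_index(idx):.1f}%")
```

This prints 1.2% for smart-phone, 2.7% for tablet, 3.0% for desktop and 1.5% for blended mobile, matching the figures quoted.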

Nice charts make better books and articles, so I had a hunt through the Google Consumer Barometer 2014 for some extra supporting data, expecting it to tell me that people use their smart-phones for research but not so much for purchasing, which also seems to be received wisdom. The actual data was rather confounding.

Google asks two questions, which I’ll paraphrase as:

Q1. Did you perform research in the last week on your device?

Q2. Did you buy a product or service in the last week on your device?


Clearly they get a percentage of respondents who say yes or no to each question. Where it gets rather interesting is when you express Q2 as a percentage of Q1. Essentially you are asking:

Q3. What % of people who researched online on a device also made a purchase online on that device, over the duration of a week?
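A sketch of the Q3 calculation for one device. The survey shares below are invented purely to show the arithmetic; they are not Consumer Barometer figures. As in the text, dividing Q2 by Q1 implicitly treats everyone who bought on a device as having also researched on it.

```python
def purchase_given_research(pct_researched, pct_purchased):
    """Q3: Q2 expressed as a percentage of Q1 for one device.

    Assumes (as the text does) that buyers on a device also researched on it,
    so the ratio reads as 'researchers who went on to buy'.
    """
    if pct_researched <= 0:
        raise ValueError("pct_researched must be positive")
    return 100.0 * pct_purchased / pct_researched


# Hypothetical survey shares (% of respondents), NOT real Consumer Barometer data.
survey = {"smartphone": (40.0, 12.0), "desktop": (60.0, 18.0)}
for device, (q1, q2) in survey.items():
    print(f"{device}: {purchase_given_research(q1, q2):.0f}% of researchers bought")
```

In this made-up case smart-phone buyers are a smaller share of all respondents (12% versus 18%), yet the share of researchers who went on to buy is 30% on both devices.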

Here are the results for a sample of countries:

In many countries – the UK, Germany, Japan, the UAE and even Russia on this chart – the differences between mobile and desktop customers suddenly disappear. In most others the differences are much smaller than expected, and for China specifically the (not wholly unexpected) suggestion is that mobile converts better than desktop.

How, then, to reconcile this with the well-documented statistics that show that there’s a big difference? The answer appears to lie in the structure of the question. Notice that the Consumer Barometer asks about an extended time period: a week. It does not ask about ephemeral visits, visitors, or page-views.

Depending on which studies you believe, most purchases have a consideration period of somewhere between 2 days and 2 weeks in countries where internet retailing is well-established and online research is therefore a prevalent shopper behaviour. There’s a hint of consistency here: the Consumer Barometer asks about a week, and shoppers take about a week to decide.

What we seem to be seeing is that a shopper needs to consume a consistent quantity of information in order to make a purchase decision. The ratio we’d really like to study is

Checkouts per quantum of information consumed

but that isn’t terribly easy to measure, so we need to look at some more accessible proxies for it.

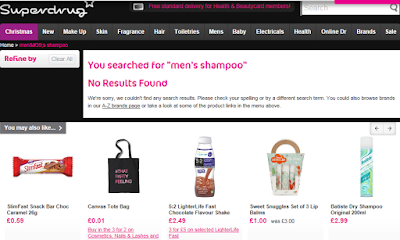

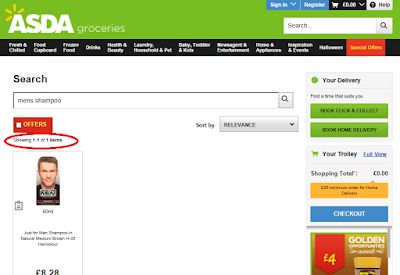

One possibility is page-views. This is often a KPI in its own right, or is wrapped into a more complex “engagement” KPI. But if you think about it in terms of quantity of information, it’s unhelpful. A page on a mobile site often contains much less information, and even if it doesn’t, shoppers engage with mobile site pages differently. On desktop they read the detail; on mobile they just read the headlines, and they may well be reading in a much more interrupted environment, such as while commuting.

Fortunately there’s a much better statistic: session-duration. The first thing this does is bear out the headlines-versus-detail idea. Here are the published session durations for the New York Times:

| NY Times data | Avg pages per visit | Avg session duration |
|---|---|---|
| Mobile | 2.55 | 1 min 43 secs |
| Desktop | 3.58 | 17 mins 31 secs |

(Data source: SimilarWeb/Clickz)
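Dividing session duration by pages per visit makes the headlines-versus-detail point explicit. A quick calculation on the New York Times figures quoted above (the helper name is my own):

```python
def to_seconds(minutes, seconds):
    """Convert a 'M mins S secs' duration to seconds."""
    return minutes * 60 + seconds


# New York Times figures as quoted (SimilarWeb/Clickz).
nyt = {
    "mobile": {"pages_per_visit": 2.55, "session_secs": to_seconds(1, 43)},
    "desktop": {"pages_per_visit": 3.58, "session_secs": to_seconds(17, 31)},
}

secs_per_page = {
    device: d["session_secs"] / d["pages_per_visit"] for device, d in nyt.items()
}
for device, secs in secs_per_page.items():
    print(f"{device}: {secs:.0f} seconds per page")
```

That works out at roughly 40 seconds per page on mobile against just under 5 minutes on desktop, about seven times as long.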

Much more importantly for a retailer, it also bears out the idea that checkouts per visitor-minute is a much better measure of the effectiveness of a given channel. Obtaining publishable conversion data for retailers is pretty tricky. We can at least test the hypothesis in print by considering session-duration data published for Amazon against generic conversion-rate data.

| Channel | Generic conversion rate | Amazon avg session duration | % conversion rate per minute of session duration |
|---|---|---|---|
| Blended mobile | 1.5% | 4 mins 38 secs | 0.32% |
| Desktop | 3.0% | 8 mins 35 secs | 0.35% |

(Data source for Amazon: SimilarWeb/Clickz)

As if by magic, the conversion rate per visitor-minute is pretty much consistent across both channels!
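The per-minute column can be reproduced from the other two, and the sketch below also compares the mobile/desktop ratio per session with the ratio per minute. As in the text, it pairs generic conversion rates with Amazon session durations, so it is a rough check rather than a like-for-like measurement:

```python
def conversion_per_minute(conversion_pct, minutes, seconds):
    """Conversion rate (%) divided by average session duration in minutes."""
    return conversion_pct / (minutes + seconds / 60)


# Generic conversion rates paired with Amazon's average session durations.
mobile = conversion_per_minute(1.5, 4, 38)
desktop = conversion_per_minute(3.0, 8, 35)

print(f"blended mobile: {mobile:.2f}% per minute")
print(f"desktop:        {desktop:.2f}% per minute")
print(f"mobile/desktop per session: {1.5 / 3.0:.2f}, per minute: {mobile / desktop:.2f}")
```

Per session, blended mobile converts at half the desktop rate (0.50); per minute, the ratio rises to about 0.93.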

OK, one data point doesn’t make a case. Privately I’ve tested this against various data that clients have shared with me, and it does seem to stand up remarkably well, but unfortunately of course I can’t publish such data points.

It seems to “feel” right, though: the quantity of checkouts you’ll get on a channel is proportional to the time (or, more likely in reality, the information) customers spend engaging with you and gleaning information that’s useful to them in what they’re doing. Viewed from the customer’s perspective, a successful visit to a site – a “conversion” from their point of view – is one where they succeed in finding out what they wanted to know.

They might do that staccato on mobile, gathering one bit of data and then looking elsewhere. Or they might do it legato on desktop or tablet, gathering multiple items in one trip. More likely they’re doing both during the course of a decision-making process lasting a week or two. There’s a probability that this particular item of information, gathered on this particular visit, is the final piece in the jigsaw for them, and results in a conversion from your point of view. The chance of that happening on a given channel or device is proportional to the time spent (and hence the information gathered) on that channel.
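One way to make “proportional to the time spent” concrete, offered here as an illustrative assumption rather than a claim about real shoppers, is a constant per-minute chance of the final piece falling into place, identical on every device. The value of `RATE_PER_MINUTE` is hypothetical; the session lengths are the Amazon averages quoted earlier:

```python
import math

# Hypothetical chance per visitor-minute of finding the "final piece".
# ASSUMPTION: the same on every device; this is the idea being illustrated.
RATE_PER_MINUTE = 0.0035


def session_conversion(minutes, rate=RATE_PER_MINUTE):
    """P(conversion) in a session, assuming a constant per-minute rate (exponential model)."""
    return 1 - math.exp(-rate * minutes)


# Average session lengths (in minutes) borrowed from the Amazon figures.
durations = {"blended mobile": 4 + 38 / 60, "desktop": 8 + 35 / 60}

for device, mins in durations.items():
    per_session = session_conversion(mins)
    print(f"{device}: {per_session:.2%} per session, {per_session / mins:.3%} per minute")
```

Under that assumption, per-session conversion on mobile comes out at a little over half the desktop figure, while conversion per minute is nearly identical on both. The small remaining gap comes from the curvature of the exponential, which is negligible while rate × minutes stays small.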

If this idea is right, it’s potentially a very good way of measuring the relative effectiveness of your user-experience by channel. Viewed overall, it tends to suggest that the stereotype that mobile doesn’t convert anywhere near as well as desktop is simply wrong.

It would be really interesting to hear from sites willing to share data that supports or challenges this. For those worried about confidentiality, I’d simply note that you can use any arbitrary time-unit and keep that unit private, provided you use it consistently across devices or channels, and then just report checkouts per internally measured session-duration unit by device-type.
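For anyone tempted to share, here is a minimal sketch of the confidential version, going one step further by expressing each device relative to desktop. The totals and the 37-second “secret” unit are invented, and the function name is hypothetical; the point is that the ratios come out the same whatever unit you choose:

```python
def rates_relative_to_desktop(data, unit_secs):
    """Checkouts per arbitrary time unit, expressed relative to desktop.

    `data` maps device -> (checkouts, total_session_secs) and must include
    "desktop". Every device is divided by the same unit, so the ratios do
    not depend on unit_secs.
    """
    rates = {
        device: checkouts / (secs / unit_secs)
        for device, (checkouts, secs) in data.items()
    }
    return {device: rate / rates["desktop"] for device, rate in rates.items()}


# Made-up totals for illustration only.
data = {"desktop": (300, 90_000), "smartphone": (120, 40_000), "tablet": (150, 48_000)}

print(rates_relative_to_desktop(data, unit_secs=60))  # minutes
print(rates_relative_to_desktop(data, unit_secs=37))  # a private in-house unit
```

Both calls give the same ratios, up to floating-point rounding (smart-phone 0.9 and tablet 0.9375 against desktop 1.0), so only the relative figures ever need to be published.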